SOS Bias in Word Embeddings


SOS: Systematic Offensive Stereotyping Bias in Word Embeddings

We define Systematic Offensive Stereotyping (SOS) bias from a statistical perspective as “A systematic association in the word embeddings between profanity and marginalised groups of people”. In other words, SOS bias is the association of slurs and profane terms with groups of people, especially groups marginalised on the basis of ethnicity, gender, or sexual orientation.

Previous studies of similar biases in hate speech detection models examined them within the hate speech datasets themselves, not in widely used word embeddings, which, in contrast, are not trained on data specifically curated to contain offensive content. SOS bias in word embeddings could lead models to associate marginalised groups with hate speech and profanity, which might result in those groups being blocked and silenced, especially on social media platforms.

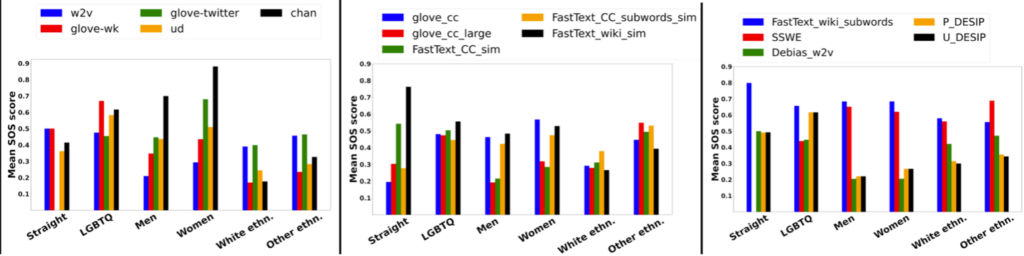

In our work, we introduce a quantitative measure of SOS bias, validate it on the most commonly used word embeddings, and investigate whether it explains the performance of different word embeddings on the task of hate speech detection.

We propose to measure SOS bias using the cosine similarity between swear words and words that describe social groups. For the swear words, we used a list of 403 offensive expressions, reduced to 279 after removing multi-word expressions. To describe the groups, we used a non-offensive identity (NOI) word list covering marginalised groups of people (women, LGBTQ, non-white ethnicities) and non-marginalised ones (men, straight, white ethnicities).
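The measure can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: it assumes that the SOS score of an identity word is its mean cosine similarity to the swear words, and that a group's score is the mean over its identity words; the published metric may include additional normalisation. The random toy embeddings, the placeholder tokens `swear1`, `swear2` and `swear3`, and the helper names `single_word_terms`, `sos_word` and `sos_group` are all hypothetical.

```python
import numpy as np

# Toy stand-in for a pretrained embedding model (hypothetical): random 50-d
# vectors keyed by word. A real run would load e.g. word2vec or GloVe vectors.
rng = np.random.default_rng(0)
VOCAB = ["women", "gay", "black", "men", "straight", "white",
         "swear1", "swear2", "swear3"]
embeddings = {w: rng.normal(size=50) for w in VOCAB}


def single_word_terms(expressions):
    """Keep only single-token entries, mirroring the removal of multi-word expressions."""
    return [e for e in expressions if len(e.split()) == 1]


def cosine(u, v):
    """Cosine similarity between two vectors."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))


def sos_word(word, swear_words, emb):
    """Assumed per-word score: mean cosine similarity to every in-vocabulary swear word."""
    sims = [cosine(emb[word], emb[s]) for s in swear_words if s in emb]
    return sum(sims) / len(sims)


def sos_group(group_words, swear_words, emb):
    """Assumed group score: mean per-word score over the group's in-vocabulary words."""
    scores = [sos_word(w, swear_words, emb) for w in group_words if w in emb]
    return sum(scores) / len(scores)


# Placeholder swear list; the multi-word entry is filtered out.
swear_list = single_word_terms(["swear1", "swear2", "swear3", "multi word swear"])
marginalised = ["women", "gay", "black"]
non_marginalised = ["men", "straight", "white"]

m = sos_group(marginalised, swear_list, embeddings)
n = sos_group(non_marginalised, swear_list, embeddings)
print(f"marginalised: {m:.3f}  non-marginalised: {n:.3f}")
```

Under these assumptions, a consistently higher score for the marginalised groups than for the non-marginalised ones would indicate SOS bias. With random vectors both scores are close to zero and carry no meaning; the sketch only shows the mechanics.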

Results show that SOS bias exists in almost all examined word embeddings and that the proposed SOS bias metric correlates positively with the statistics of published surveys on online extremism. We also show that the proposed metric captures information distinct from that measured by established social bias metrics. However, we found no evidence that SOS bias explains the performance of hate speech detection models based on the different word embeddings.
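One way to check whether a per-group bias score agrees with survey data is to correlate the two across groups. The sketch below assumes a Pearson coefficient computed with `numpy.corrcoef`; the paper may use a different coefficient, and every number in it is an invented placeholder, not a value from the paper or from any survey.

```python
import numpy as np

# Hypothetical placeholder values, one entry per identity group, same order in both arrays.
groups = ["women", "lgbtq", "non-white"]
sos_scores = np.array([0.12, 0.20, 0.15])    # per-group SOS scores from one embedding
survey_stats = np.array([0.30, 0.45, 0.35])  # hypothetical survey statistic per group

# Pearson correlation coefficient: off-diagonal entry of the 2x2 correlation matrix.
r = float(np.corrcoef(sos_scores, survey_stats)[0, 1])
print(f"Pearson r = {r:.2f}")
```

A positive `r` means that groups with higher SOS scores also tend to have higher survey statistics; with only a handful of groups, such a coefficient should be read with caution.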

More details are available in the related outputs:

Fatma Elsafoury, Steve R. Wilson, Stamos Katsigiannis, and Naeem Ramzan. 2022. SOS: Systematic Offensive Stereotyping Bias in Word Embeddings. In Proceedings of the 29th International Conference on Computational Linguistics, pages 1263–1274, Gyeongju, Republic of Korea. International Committee on Computational Linguistics. https://aclanthology.org/2022.coling-1.108/